I am a Research Fellow at the University of Oxford, working with ʻŌiwi Parker Jones and Philip Torr. I am also a member of the ELLIS Society. I am passionate about advancing multimodal machine learning by creating models that are adaptable and trustworthy.

I earned my PhD in Artificial Intelligence at the University of Amsterdam, advised by Maarten de Rijke and Paul Groth. Before that, I obtained an MSc in AI from KU Leuven, where I was advised by Marie-Francine Moens.

During my academic journey, I interned at Microsoft Research, the Gemini team, Bloomberg AI, Amazon Science, LIIR at KU Leuven, and ETH Zurich. Alongside my research, I hold leadership roles in initiatives focused on diversity and inclusion in machine learning, including organizing the WiML Mentorship Program, serving as General Chair of WiML at ICML 2025, and mentoring through the ELLIS Inclusive AI initiative.


Research


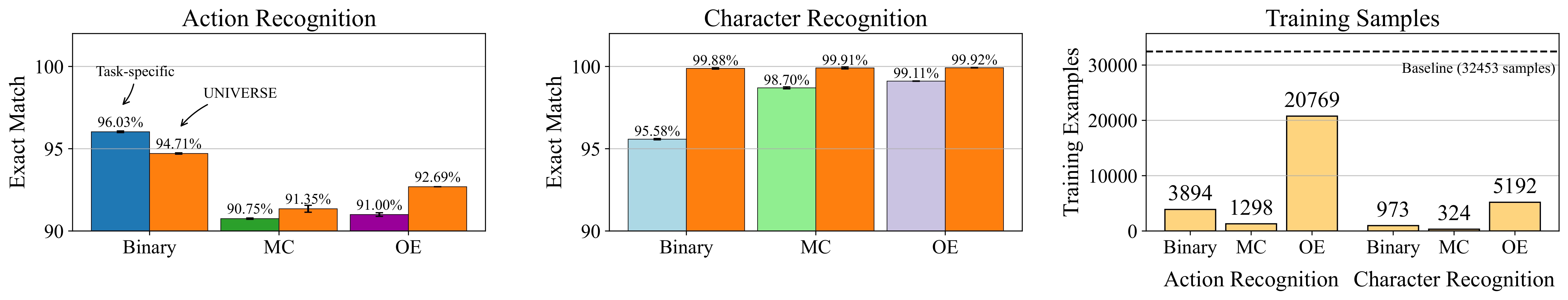

Mariya Hendriksen, Tabish Rashid, David Bignell, Raluca Georgescu, Abdelhak Lemkhenter, Katja Hofmann, Sam Devlin*, Sarah Parisot*

Oral at NeurIPS LAW 2025; under submission

arXiv / GitHub

We focus on the challenge of automated evaluation for world model rollouts and introduce a structured semantic protocol for this domain. We also propose UNIVERSE, a method for efficient adaptation of VLMs to this scenario.


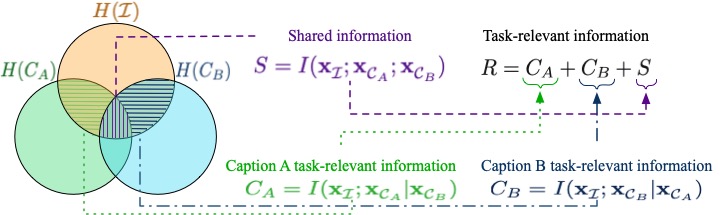

Maurits Bleeker*, Mariya Hendriksen*, Andrew Yates, Maarten de Rijke

TMLR, 2024

arXiv / bibtex / GitHub

We propose a framework to examine the shortcut learning problem in vision-language contrastive representation learning with multiple captions per image. We show that this problem can be partially mitigated using a form of text reconstruction and implicit feature modification.


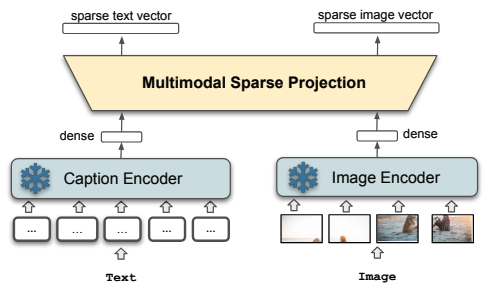

Thong Nguyen*, Mariya Hendriksen*, Andrew Yates, Maarten de Rijke

ECIR, 2024

arXiv / GitHub

We propose a framework for multimodal learned sparse retrieval.


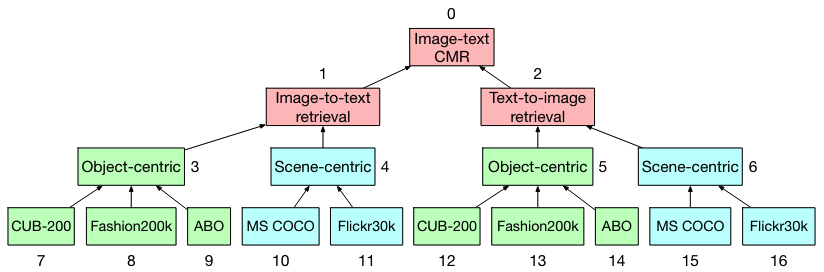

Mariya Hendriksen, Svitlana Vakulenko, Ernst Kuiper, Maarten de Rijke

ECIR, 2023

arXiv / GitHub


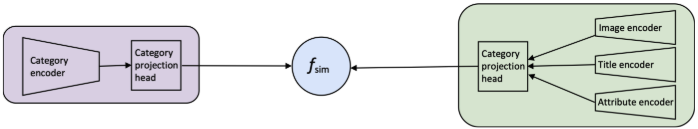

Mariya Hendriksen, Maurits Bleeker, Svitlana Vakulenko, Nanne van Noord, Maarten de Rijke

ECIR, 2022

arXiv / GitHub


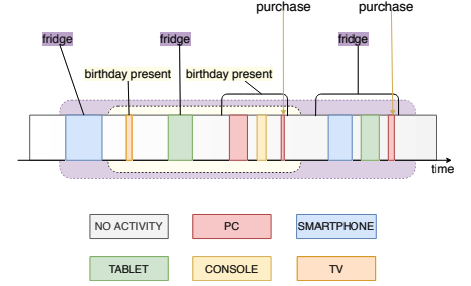

Mariya Hendriksen, Ernst Kuiper, Pim Nauts, Sebastian Schelter, Maarten de Rijke

SIGIR eCom, 2020

arXiv / related work